Percona Kubernetes Operators support various options for storage: Persistent Volume (PV), hostPath, ephemeral storage, etc. In most cases, PVs are used; the Operator provisions them through Storage Classes and Persistent Volume Claims.

Storage Classes define the underlying volume type that should be used (e.g., AWS EBS gp2 or io1), the file system (xfs, ext4), and permissions. There are several reasons why cluster administrators may want to change the Storage Class of already existing volumes:

- The DB cluster is underutilized, and switching from io1 to gp2 is a good way to save costs

- The other way around: the DB cluster is saturated on IO, and the volumes need to be upsized

- The file system should be switched for better performance (MongoDB performs much better on xfs)

In this blog post, we will show the best way to change the Storage Class with Percona Operators without introducing downtime to the database. We will cover changing the Storage Class, but not migrating from PVCs to other storage types, like hostPath.

Changing Storage Class on Kubernetes

Prerequisites:

- GKE cluster

- Percona XtraDB Cluster (PXC) deployed with Percona Operator. See instructions here.

Goal:

- Change the storage from pd-standard to pd-ssd without downtime for PXC.

Planning

The steps we are going to take are the following:

- Create a new Storage Class for pd-ssd volumes

- Change the `storageClassName` in the Custom Resource (CR)

- Change the `storageClassName` in the StatefulSet

- Scale up the cluster (optional, to avoid performance degradation)

- Reprovision the Pods one by one to change the storage

- Scale down the cluster


Execution

Create the Storage Class

By default, the `standard` Storage Class is already present in GKE; we need to create a new StorageClass for SSD:

```
$ cat pd-ssd.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ssd
provisioner: kubernetes.io/gce-pd
parameters:
  type: pd-ssd
volumeBindingMode: Immediate
reclaimPolicy: Delete

$ kubectl apply -f pd-ssd.yaml
```
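If you would rather generate manifests from a script than maintain YAML by hand, the same StorageClass can be built as a Python dictionary and printed as JSON, which `kubectl apply -f` accepts just like YAML. This is a minimal sketch: the helper name `storage_class_manifest` and the script name `sc.py` are hypothetical, and the fields simply mirror `pd-ssd.yaml` above (in-tree `kubernetes.io/gce-pd` provisioner).

```python
import json


def storage_class_manifest(name: str, disk_type: str) -> dict:
    """Return a StorageClass manifest equivalent to pd-ssd.yaml (hypothetical helper)."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": "kubernetes.io/gce-pd",  # in-tree GCE PD provisioner, as in pd-ssd.yaml
        "parameters": {"type": disk_type},      # e.g. pd-standard or pd-ssd
        "volumeBindingMode": "Immediate",
        "reclaimPolicy": "Delete",              # the PV and its disk go away with the PVC
    }


if __name__ == "__main__":
    # Usage: python3 sc.py > pd-ssd.json && kubectl apply -f pd-ssd.json
    print(json.dumps(storage_class_manifest("ssd", "pd-ssd"), indent=2))
```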

The new Storage Class will be called `ssd` and will provision volumes of `type: pd-ssd`.

Change the storageClassName in Custom Resource

We need to change the configuration for Persistent Volume Claims in our Custom Resource. The variable we look for is `storageClassName`, and it is located under `spec.pxc.volumeSpec.persistentVolumeClaim`.

```diff
 spec:
   pxc:
     volumeSpec:
       persistentVolumeClaim:
-        storageClassName: standard
+        storageClassName: ssd
```

Now apply the new cr.yaml:

```
$ kubectl apply -f deploy/cr.yaml
```
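Editing cr.yaml is the approach used in this post. As an alternative, the same change can be sent as a JSON merge patch with `kubectl patch`. The sketch below only builds the command for you to review; it assumes the custom resource is named `cluster1` (matching the `cluster1-pxc` Pod names) and that the `pxc` short name for the PerconaXtraDBCluster resource is available. If you patch instead of editing, update cr.yaml as well; otherwise a later `kubectl apply -f deploy/cr.yaml` will most likely set the old Storage Class back.

```python
import json
import shlex
from typing import List


def storage_class_patch(storage_class: str) -> dict:
    """Merge patch touching only spec.pxc.volumeSpec.persistentVolumeClaim.storageClassName."""
    return {
        "spec": {"pxc": {"volumeSpec": {"persistentVolumeClaim": {"storageClassName": storage_class}}}}
    }


def patch_command(cr_name: str, patch: dict) -> List[str]:
    """Build a `kubectl patch` invocation for a PerconaXtraDBCluster (short name `pxc`)."""
    return ["kubectl", "patch", "pxc", cr_name, "--type=merge", "-p", json.dumps(patch)]


if __name__ == "__main__":
    # Prints a shell-quoted command; nothing is executed against the cluster.
    print(shlex.join(patch_command("cluster1", storage_class_patch("ssd"))))
```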

Change the storageClassName in the StatefulSet

StatefulSets are almost immutable: when you try to edit the object, you get the following error:

```
# * spec: Forbidden: updates to statefulset spec for fields other than 'replicas', 'template', and 'updateStrategy' are forbidden
```

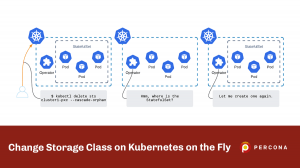

We will rely on the fact that the Operator controls the StatefulSet. If we delete it, the Operator recreates it with the last applied configuration, which for us means with the new Storage Class. However, deleting a StatefulSet also terminates its Pods, and our goal is zero downtime. To get there, we will delete the StatefulSet but keep the Pods running, which can be done with the `--cascade` flag:

```
$ kubectl delete sts cluster1-pxc --cascade=orphan
```

As a result, the Pods stay up, and the Operator recreates the StatefulSet with the new `storageClassName`:

```
$ kubectl get sts | grep cluster1-pxc
cluster1-pxc   3/3   8s
```
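To confirm that the recreated StatefulSet really picked up the new class, you can inspect the `volumeClaimTemplates` in the JSON returned by `kubectl get sts cluster1-pxc -o json`. A minimal sketch under that assumption; both helper names are hypothetical, and only `fetch_statefulset` needs cluster access.

```python
import json
import subprocess
from typing import Dict, Optional


def claim_template_classes(sts: dict) -> Dict[str, Optional[str]]:
    """Map each volumeClaimTemplate name to its storageClassName (None if unset)."""
    return {
        t["metadata"]["name"]: t["spec"].get("storageClassName")
        for t in sts["spec"].get("volumeClaimTemplates", [])
    }


def fetch_statefulset(name: str) -> dict:
    """Fetch a StatefulSet as parsed JSON via kubectl (requires cluster access)."""
    result = subprocess.run(
        ["kubectl", "get", "sts", name, "-o", "json"],
        check=True, capture_output=True, text=True,
    )
    return json.loads(result.stdout)
```

With cluster access, `claim_template_classes(fetch_statefulset("cluster1-pxc"))` should map the `datadir` template (the prefix of the `datadir-cluster1-pxc-*` PVC names) to `ssd`.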


Scale up the Cluster (Optional)

Changing the storage type requires terminating the Pods, which decreases the computational power of the cluster and might cause performance issues. To keep performance up during the operation, we are going to change the size of the cluster from 3 to 5 nodes:

```diff
 spec:
   pxc:
-    size: 3
+    size: 5
```

```
$ kubectl apply -f deploy/cr.yaml
```
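If you drive the resize from a script rather than cr.yaml, a small guard can keep the size odd. Galera-based clusters such as PXC are usually run with an odd number of nodes so that a majority can still form a quorum when a node is lost; that rule and the helper name `size_patch` are assumptions of this sketch. The resulting merge patch could be applied with `kubectl patch pxc cluster1 --type=merge -p ...` (assuming the CR is named `cluster1`), and cr.yaml should then be updated too, so that a later `kubectl apply` does not undo it.

```python
def size_patch(size: int) -> dict:
    """Build a merge patch for spec.pxc.size, rejecting even or too-small sizes."""
    # Odd sizes keep a clear majority for Galera quorum (assumption of this sketch).
    if size < 3 or size % 2 == 0:
        raise ValueError(f"refusing PXC size {size}: use an odd number of at least 3")
    return {"spec": {"pxc": {"size": size}}}
```

For example, `size_patch(5)` returns `{"spec": {"pxc": {"size": 5}}}`, while `size_patch(4)` raises `ValueError`.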

Since we have already changed the StatefulSet, the new PXC Pods are provisioned with volumes backed by the new StorageClass:

```
$ kubectl get pvc
NAME                     STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
datadir-cluster1-pxc-0   Bound    pvc-6476a94c-fa1b-45fe-b87e-c884f47bd328   6Gi        RWO            standard       78m
datadir-cluster1-pxc-1   Bound    pvc-fcfdeb71-2f75-4c36-9d86-8c68e508da75   6Gi        RWO            standard       76m
datadir-cluster1-pxc-2   Bound    pvc-08b12c30-a32d-46a8-abf1-59f2903c2a9e   6Gi        RWO            standard       64m
datadir-cluster1-pxc-3   Bound    pvc-b96f786e-35d6-46fb-8786-e07f3097da02   6Gi        RWO            ssd            69m
datadir-cluster1-pxc-4   Bound    pvc-84b55c3f-a038-4a38-98de-061868fd207d   6Gi        RWO            ssd            68m
```
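With more Pods this table gets harder to scan. A small helper can group the PVCs by Storage Class from the output of `kubectl get pvc -o json`, so you can see at a glance which Pods still need to be reprovisioned. A sketch: the helper name is hypothetical, and the default name prefix `datadir-cluster1-pxc-` is taken from the output above and will differ for other clusters.

```python
import json
from collections import defaultdict
from typing import Dict, List, Optional


def pvcs_by_class(pvc_list_json: str,
                  prefix: str = "datadir-cluster1-pxc-") -> Dict[Optional[str], List[str]]:
    """Group PVC names that start with `prefix` by their storageClassName."""
    groups = defaultdict(list)
    for item in json.loads(pvc_list_json)["items"]:
        name = item["metadata"]["name"]
        if name.startswith(prefix):
            groups[item["spec"].get("storageClassName")].append(name)
    # Sort by the numeric ordinal suffix so that pxc-10 comes after pxc-9.
    return {cls: sorted(names, key=lambda n: int(n.rsplit("-", 1)[1]))
            for cls, names in groups.items()}
```

For the cluster above, it would list `datadir-cluster1-pxc-0` to `-2` under `standard` and `-3`, `-4` under `ssd`.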

Reprovision the Pods One by One to Change the Storage

This is the step where underlying storage is going to be changed for the database Pods.

Delete the PVC of the Pod that you are going to reprovision. For example, for Pod `cluster1-pxc-2` the PVC is called `datadir-cluster1-pxc-2`:

```
$ kubectl delete pvc datadir-cluster1-pxc-2
```

The PVC will not be deleted right away because a Pod is still using it; it stays in the Terminating state. To proceed, delete the Pod:

```
$ kubectl delete pod cluster1-pxc-2
```

The Pod is deleted, and so is the PVC. The StatefulSet controller notices that the Pod is gone and recreates it, together with a new PVC that uses the new Storage Class:

```
$ kubectl get pods
...
cluster1-pxc-2   0/3   Init:0/1   0   7s
```

```
$ kubectl get pvc datadir-cluster1-pxc-2
NAME                     STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
…
datadir-cluster1-pxc-2   Bound    pvc-08b12c30-a32d-46a8-abf1-59f2903c2a9e   6Gi        RWO            ssd            10s
```

The STORAGECLASS column shows that this PVC now uses the `ssd` Storage Class.
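The two commands for one Pod can be generated so that only the Pod name changes between iterations. One practical detail: by default, `kubectl delete` waits until the object is actually gone, and a PVC still used by a Pod stays Terminating, so the PVC deletion would block until the Pod is deleted. Passing `--wait=false` makes it return immediately instead. The helper names below are hypothetical; `datadir` is the claim template name implied by the PVC names.

```python
import subprocess
from typing import List


def reprovision_commands(pod: str, claim_template: str = "datadir") -> List[List[str]]:
    """kubectl commands that drop a Pod's PVC and then the Pod itself."""
    pvc = f"{claim_template}-{pod}"  # StatefulSet PVC naming: <template>-<pod name>
    return [
        # Mark the PVC for deletion without blocking; it stays Terminating while in use.
        ["kubectl", "delete", "pvc", pvc, "--wait=false"],
        # Deleting the Pod releases the PVC; the StatefulSet then recreates both.
        ["kubectl", "delete", "pod", pod],
    ]


def run(commands: List[List[str]]) -> None:
    """Execute the commands in order, stopping at the first failure."""
    for cmd in commands:
        subprocess.run(cmd, check=True)
```

Used as `run(reprovision_commands("cluster1-pxc-2"))`, one Pod at a time, waiting for the node to sync before moving on.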

The Pod might get stuck in the Pending state with the following error:

```
Warning  FailedScheduling  63s (x3 over 71s)  default-scheduler  persistentvolumeclaim "datadir-cluster1-pxc-2" not found
```

This can happen due to a race condition: the Pod was created while the old PVC was still terminating, so by the time the Pod is ready to start, that PVC is already gone. Just delete the Pod again so that the PVC is recreated.
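If you automate the loop, this case can be recognized from the Pod's events, for example those returned by `kubectl get events --field-selector involvedObject.name=cluster1-pxc-2 -o json`, whose items carry `reason` and `message` fields. The sketch below only decides whether the Pod should be deleted again; the function names are hypothetical, and matching on the message text is an assumption based on the error shown above.

```python
import re
from typing import Optional

# Matches the scheduler message shown above: persistentvolumeclaim "<name>" not found
_MISSING_PVC = re.compile(r'persistentvolumeclaim "([^"]+)" not found')


def missing_pvc(message: str) -> Optional[str]:
    """Return the PVC name from a 'not found' scheduler message, if present."""
    match = _MISSING_PVC.search(message)
    return match.group(1) if match else None


def should_delete_pod_again(pod: str, reason: str, message: str,
                            claim_template: str = "datadir") -> bool:
    """True when the Pod failed to schedule because its own PVC is gone."""
    return reason == "FailedScheduling" and missing_pvc(message) == f"{claim_template}-{pod}"
```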

Once the Pod is up, State Snapshot Transfer (SST) kicks in, and the data is synced from the other nodes. This might take a while if your cluster holds a lot of data and is heavily utilized. Wait until the node is fully up and running and the sync has finished; only then proceed to the next Pod.
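One way to decide that a node is done is to check its Galera status variables: `wsrep_local_state_comment` should be `Synced`, `wsrep_cluster_status` should be `Primary`, `wsrep_ready` should be `ON`, and `wsrep_cluster_size` should match the expected number of nodes (5 while the cluster is scaled up). A sketch, assuming you collect the output of `SHOW GLOBAL STATUS LIKE 'wsrep%'` with the `mysql` client's `-N -B` flags (no header, tab-separated), for example via `kubectl exec` into the Pod; credentials and container details depend on your deployment and are left out. The function names are hypothetical.

```python
from typing import Dict


def parse_status(tsv: str) -> Dict[str, str]:
    """Parse `name<TAB>value` lines as printed by `mysql -N -B`."""
    status = {}
    for line in tsv.splitlines():
        if "\t" in line:
            name, value = line.split("\t", 1)
            status[name] = value.strip()
    return status


def node_synced(status: Dict[str, str], expected_size: int) -> bool:
    """True when the node is Synced, in the Primary component, and sees all nodes."""
    return (
        status.get("wsrep_local_state_comment") == "Synced"
        and status.get("wsrep_cluster_status") == "Primary"
        and status.get("wsrep_ready") == "ON"
        and int(status.get("wsrep_cluster_size", "0")) == expected_size
    )
```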

Scale Down the Cluster

Once all the Pods are running on the new storage, it is time to scale down the cluster (if it was scaled up):

```diff
 spec:
   pxc:
-    size: 5
+    size: 3
```

```
$ kubectl apply -f deploy/cr.yaml
```

Do not forget to clean up the PVCs of the fourth and fifth nodes (`datadir-cluster1-pxc-3` and `datadir-cluster1-pxc-4`). And done!
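Scaling a StatefulSet down removes the Pods with the highest ordinals but, by default, keeps their PVCs, which is why they have to be cleaned up by hand. Their names follow directly from the ordinals; a sketch with hypothetical helper names:

```python
from typing import List


def leftover_pvcs(sts_name: str, old_size: int, new_size: int,
                  claim_template: str = "datadir") -> List[str]:
    """PVCs left behind after scaling a StatefulSet from old_size down to new_size."""
    # Ordinals new_size .. old_size-1 are the ones removed by the scale-down.
    return [f"{claim_template}-{sts_name}-{i}" for i in range(new_size, old_size)]


def cleanup_command(pvcs: List[str]) -> List[str]:
    """Single kubectl command that deletes all leftover PVCs."""
    return ["kubectl", "delete", "pvc", *pvcs]
```

For this walkthrough, `leftover_pvcs("cluster1-pxc", 5, 3)` returns `['datadir-cluster1-pxc-3', 'datadir-cluster1-pxc-4']`.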

Conclusion

Changing the storage type is a simple task on public clouds, but Kubernetes does not offer the same simplicity yet. The Kubernetes and container-native landscape is evolving, and we hope to see this functionality soon. The approach to changing the Storage Class described in this blog post can be applied to both the Percona Operator for PXC and the Percona Operator for MongoDB. If you have ideas on how to automate this and are willing to collaborate with us on the implementation, please submit an issue to our public roadmap on GitHub.